“Close-up of Caucasian mother and baby girl sitting at windowsill and reading book. Young woman educating daughter at home”

1Huazhong University of Science and Technology, 2Alibaba Group, 3Zhejiang University, 4Ant Group

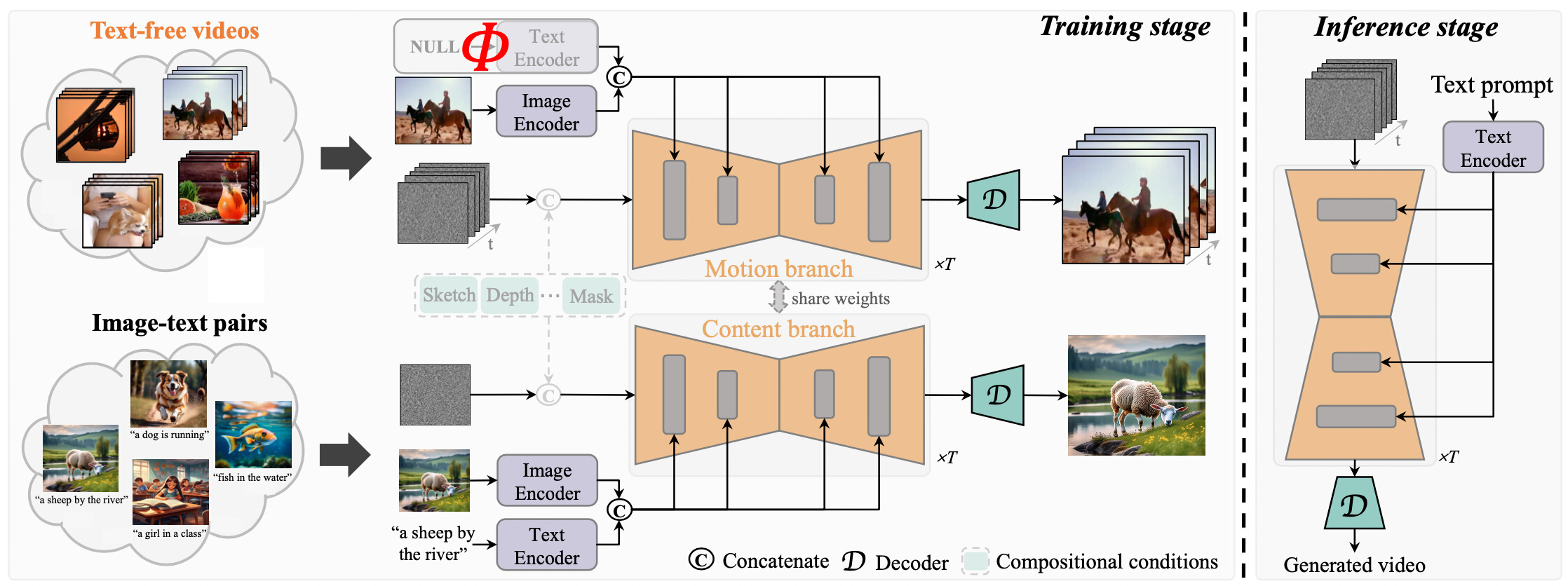

Diffusion-based text-to-video generation has made impressive progress over the past year, yet it still lags behind text-to-image generation. One key reason is the limited scale of publicly available data (e.g., 10M video-text pairs in WebVid10M vs. 5B image-text pairs in LAION), given the high cost of video captioning. By contrast, it is far easier to collect unlabeled clips from video platforms such as YouTube. Motivated by this, we propose a novel text-to-video generation framework, termed TF-T2V, which can learn directly from text-free videos. The rationale is to separate the process of text decoding from that of temporal modeling. To this end, we employ a content branch and a motion branch, which are jointly optimized with shared weights. Following this pipeline, we study the effect of doubling the scale of the training set (i.e., video-only WebVid10M) with randomly collected text-free videos and observe a performance improvement (FID from 9.67 to 8.19 and FVD from 484 to 441), demonstrating the scalability of our approach. We also find that our model enjoys a further performance gain (FID from 8.19 to 7.64 and FVD from 441 to 366) after reintroducing some text labels for training. Finally, we validate the effectiveness and generalizability of our approach on both native text-to-video generation and compositional video synthesis paradigms. Code and models will be made public here.
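To put the reported numbers in perspective, the short Python sketch below converts the FID and FVD values quoted in the abstract into relative reductions. The metric values come from the text above; the helper name `relative_reduction` and the stage labels are illustrative choices, not part of the TF-T2V code.

```python
def relative_reduction(before, after):
    """Percentage by which a lower-is-better metric dropped."""
    return 100.0 * (before - after) / before


# (before, after) values quoted in the abstract; lower is better for FID and FVD.
reported = {
    "scaling with text-free videos": {"FID": (9.67, 8.19), "FVD": (484, 441)},
    "reintroducing text labels": {"FID": (8.19, 7.64), "FVD": (441, 366)},
}

# Relative reduction per stage and metric, rounded to one decimal place.
gains = {
    stage: {
        metric: round(relative_reduction(before, after), 1)
        for metric, (before, after) in metrics.items()
    }
    for stage, metrics in reported.items()
}

for stage, stage_gains in gains.items():
    print(stage, stage_gains)
```

Under this reading, doubling the training set with text-free videos lowers FID by about 15.3% and FVD by about 8.9%, and reintroducing text labels lowers them by a further 6.7% and 17.0% respectively.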

“Charming young girl with flowers.”

"Close up of a young girl drawing on a chalkboard"

"waking in the jungle"

"Student in eyeglasses laughing at camera. Businessman posing at camera outdoors"

“Black woman, skincare and glow with smile while hand touch shoulder to feel smooth texture. Model, happy and skin with soft, healthy and wellness for portrait with beauty, cosmetics and dermatology”

"Young african american woman talking on the smartphone and using credit card at street"

"Close up to male face of young farmer examining his golden wheat field. Young agronomist standing on barley meadow and enjoying his cereal plantation. Concept of agricultural business. Slow motion"

"Close up portrait A beautiful young girl in a white T-shirt is chatting in social networks on her smartphone while sitting on a bench in a park"

“Casual Joyful South American man checking smartphone walking in city street in daylight. Smiling hispanic person using phone”

"A young spotted hyena at its den, Kruger National Park, South Africa"

"Working from home - chroma key screen on the laptop"

"Executives discussing over laptop 4k"

“Blue 3D numbers growing up”

“Absorbed African American happy man reading book lying on bed at home indoors. Portrait of smart confident relaxed guy enjoying hobby in the evening in bedroom. Lifestyle and joy concept”

“A model with long hair, 30-35 years old, wearing a hat and sunglasses.”

“Young blonde woman with serious expression standing at street”

“Close-up of chess on a black background. Wooden chess pieces. Concept: the Board game and the intellectual activities”

“CU Owl perching on stares tree / Winnipeg, Manitoba, Canada”

“A dog is running away from the camera”

“A man is running from right to left”

“Beautiful peonies are blooming on a black background”

“A manor, a rotating view”

1Huazhong University of Science and Technology, 2Alibaba Group, 3Shanghai Jiao Tong University

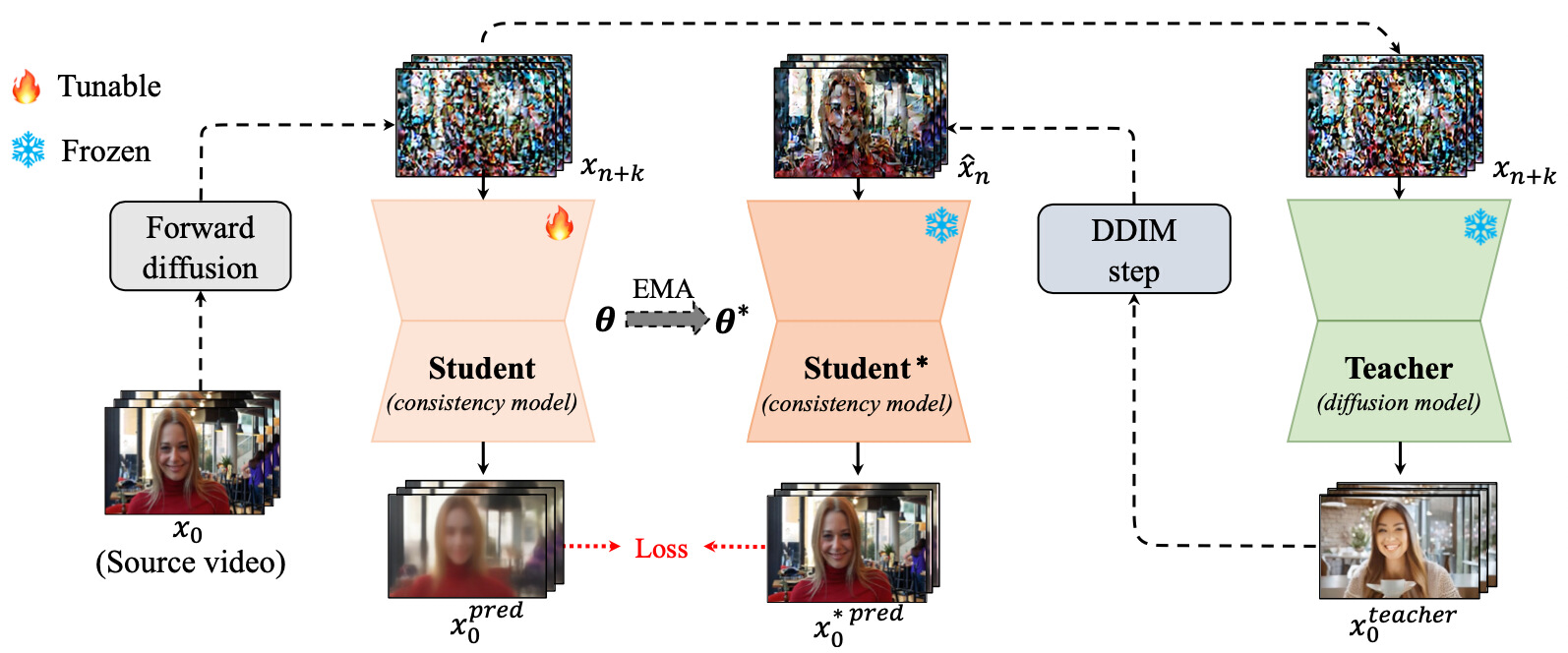

Consistency models have demonstrated powerful capabilities in efficient image generation, enabling synthesis within a few sampling steps and alleviating the high computational cost of diffusion models. However, consistency models for the more challenging and resource-intensive task of video generation remain underexplored. In this report, we present VideoLCM, a framework that fills this gap by applying the concept of consistency models from image generation to synthesize videos efficiently with minimal steps while maintaining high quality. VideoLCM builds upon existing latent video diffusion models and incorporates distillation techniques for training the latent consistency model. Experimental results demonstrate the effectiveness of VideoLCM in terms of computational efficiency, fidelity, and temporal consistency. Notably, VideoLCM achieves high-fidelity and smooth video synthesis with only 4 sampling steps, showcasing the potential for real-time synthesis. We hope that VideoLCM can serve as a simple yet effective baseline for subsequent research.
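To illustrate what few-step sampling with a consistency model looks like, here is a minimal sketch of the generic multistep consistency sampling loop from the consistency-model literature: jump from noise to a clean estimate, re-inject a smaller amount of noise, and jump again. It is not the VideoLCM implementation. The function `toy_consistency_fn`, the constants `EPS` and `T_MAX`, the 4-entry time schedule, and the flat list standing in for video latents are all assumptions made for illustration.

```python
import math
import random

EPS = 0.002    # smallest time step (assumed value, for illustration only)
T_MAX = 80.0   # largest time step, i.e. the pure-noise level (assumed value)


def toy_consistency_fn(x, t, target=0.5, sigma_data=0.5):
    """Hypothetical stand-in for a trained consistency model f(x, t).

    It blends the input with a fixed "clean" value so that f(x, EPS) == x
    (the boundary condition) while f(x, t) approaches `target` for large t.
    """
    c_skip = sigma_data**2 / ((t - EPS) ** 2 + sigma_data**2)
    return [c_skip * xi + (1.0 - c_skip) * target for xi in x]


def multistep_consistency_sample(f, x_T, timesteps, rng):
    """Denoise in len(timesteps) model calls, re-noising between calls."""
    x = f(x_T, timesteps[0])  # first jump: pure noise -> clean estimate
    calls = 1
    for t in timesteps[1:]:
        # Re-inject Gaussian noise at the lower level t, then map back to clean.
        scale = math.sqrt(t**2 - EPS**2)
        x_t = [xi + scale * rng.gauss(0.0, 1.0) for xi in x]
        x = f(x_t, t)
        calls += 1
    return x, calls


rng = random.Random(0)
x_T = [T_MAX * rng.gauss(0.0, 1.0) for _ in range(8)]  # stand-in for video latents
timesteps = [T_MAX, 20.0, 5.0, 1.0]                    # 4 steps, decreasing noise
sample, n_calls = multistep_consistency_sample(toy_consistency_fn, x_T, timesteps, rng)
print(n_calls, len(sample))
```

The loop makes one model call per entry in `timesteps`, so a 4-entry schedule corresponds to the 4 sampling steps mentioned above. In a real latent video model, `x` would be a latent tensor and the final estimate would be decoded to frames.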

“Beef burger in close up”

"Young woman using a laptop while working at home"

"Rocks Splash Ocean Close Up (HD)"

"girl paints eyes with mascara"

“Monarch butterfly drinking nectar”

"Fruitfully young red grapes hanging on vineyard, hanging on a bush in a beautiful sunny day."

"instant noodle spicy salad with pork onplate - Asian food style"

"Beautiful woman playing with her hair"

“Portrait of infant boy looking to camera”

"Man wearing protective suit showing palms with inscription"

"Pleased brunette woman talking by smartphone and looking around"

"A girl is standing in smoke holding a mask in her hand. White smoke."

@article{TFT2V,

title={A Recipe for Scaling up Text-to-Video Generation with Text-free Videos},

author={Wang, Xiang and Zhang, Shiwei and Yuan, Hangjie and Qing, Zhiwu and Gong, Biao and Zhang, Yingya and Shen, Yujun and Gao, Changxin and Sang, Nong},

journal={arXiv preprint arXiv:2312.15770},

year={2023}

}

@article{wang2023videolcm,

title={VideoLCM: Video Latent Consistency Model},

author={Wang, Xiang and Zhang, Shiwei and Zhang, Han and Liu, Yu and Zhang, Yingya and Gao, Changxin and Sang, Nong},

journal={arXiv preprint arXiv:2312.09109},

year={2023}

}

@article{videocomposer,

title={VideoComposer: Compositional Video Synthesis with Motion Controllability},

author={Wang, Xiang and Yuan, Hangjie and Zhang, Shiwei and Chen, Dayou and Wang, Jiuniu and Zhang, Yingya and Shen, Yujun and Zhao, Deli and Zhou, Jingren},

journal={NeurIPS},

year={2023}

}